May 16, 2024


“ChatGPT is an AI chatbot that uses natural language processing to create humanlike conversational dialogue.” Released in November 2022, “ChatGPT” combines “Chat,” referring to its chatbot functionality, and “GPT,” which stands for generative pre-trained transformer, a type of large language model. It continues to disrupt or dazzle the internet (depending on which side of the fence you’re on) with its AI-generated content: some believe it’s the best thing since sliced bread, while others feel it’s the end of humanity. (That might be a bit dramatic.)

However, as a digital marketing agency that cranks out human-written content for our clients daily, we thought it was time to take a deep dive into the world of AI and discuss the benefits and risks. After all, the number of players in the AI copywriting game has been exploding. And in actuality, it’s not new, as the craze would have you believe; some big players already experiencing growth are Copy.ai, Bing Chat, Google Bard, Jasper, Grammarly (which recently announced GrammarlyGO), and a dozen smaller players.

So, why now are we constantly hearing about ChatGPT?

While versions of GPT have been around for a while, this model seems to have crossed a more significant threshold: from helping with software engineering to generating business ideas and legal documents, writing a wedding toast, and passing exams, all without us knowing what was used for its training or sources. So the question now being raised is this: Is it getting too big for its britches and becoming a real risk with no concern for consequences, or is it just a promise to ease our writing?

We would like to believe it’s a virtual assistant tool that can help businesses outline a piece of content, subject line, or social post, while a human, as the subject matter expert, supplies the emotion, voice, tone, facts, sources, and expert knowledge. However, if misused, it can spell T-R-O-U-B-L-E.

First up, the benefits of AI-generated content . . .

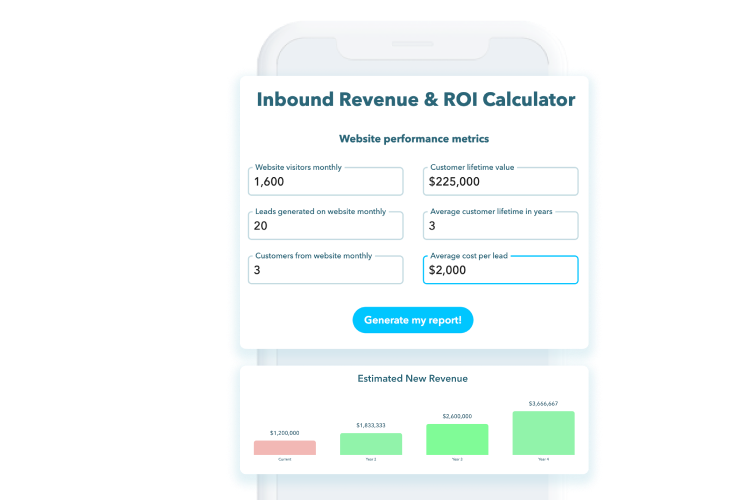

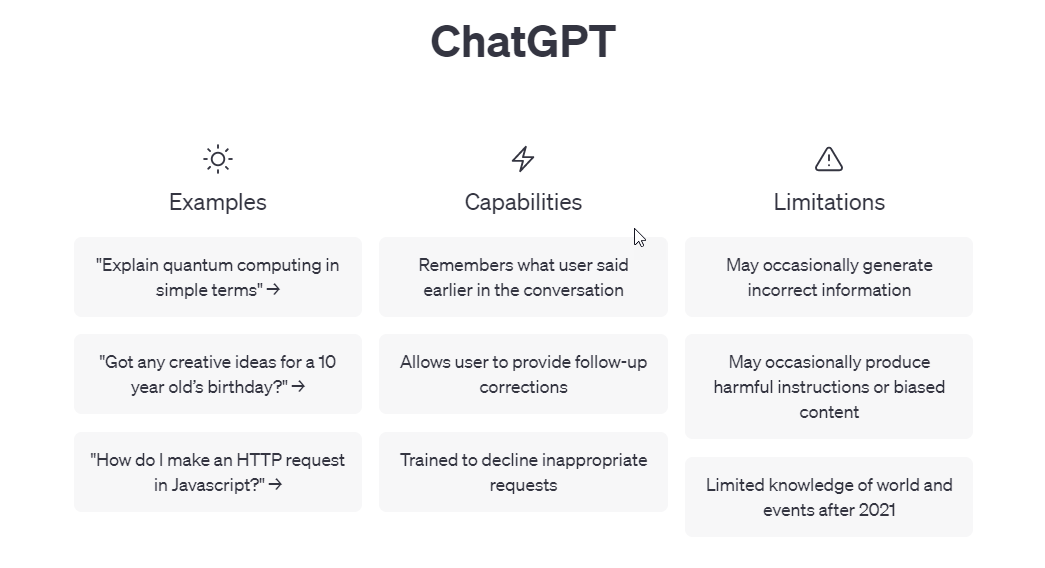

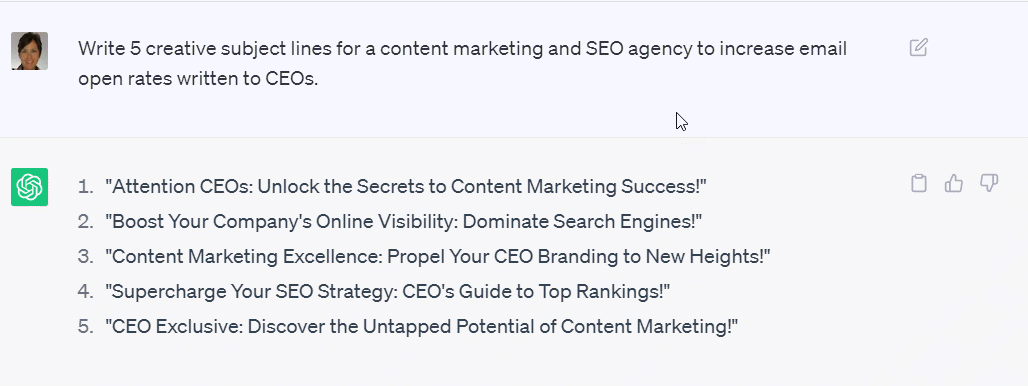

ChatGPT can be a powerful tool to enhance your copywriting, but you have to know how to use it. You have to understand how to craft an effective prompt to get a well-rounded, quality response. When you first fire up ChatGPT, you’ll see a few examples of its own prompts:

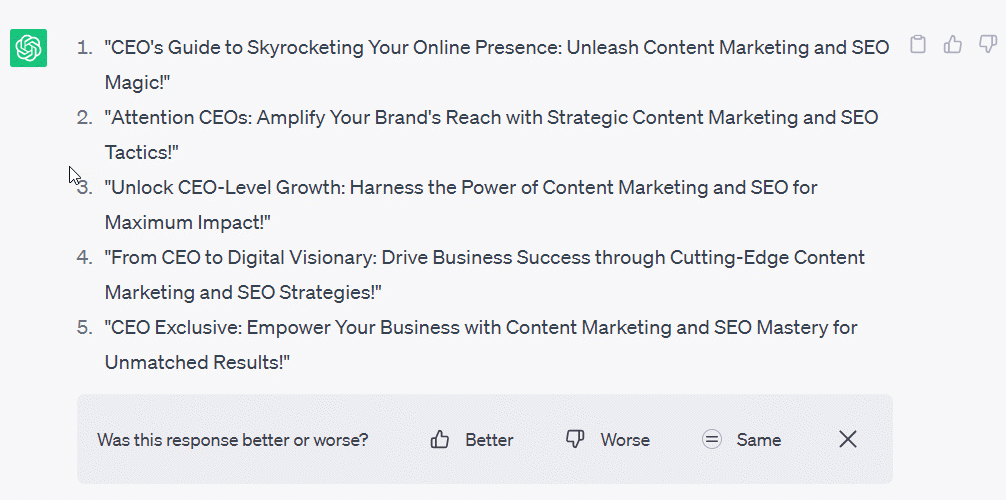

You’ll start a new conversation, give it context, define the goal, and give it as much instruction as possible; then, you’re off to the races. We played around with this prompt . . .

Then we told ChatGPT to do it again . . .

It can help you brainstorm ideas and inspire creativity. But you need to fill in the blanks and tell it what you want. Additional resource: 16 AI SEO Tools and How to Use AI in 2023
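The prompt recipe described earlier (give context, define the goal, add as much instruction as possible) can be sketched in a few lines of Python. This is only an illustration of how you might structure a prompt before pasting it into ChatGPT; the `build_prompt` helper, its parameter names, and the example text are our own assumptions, not part of any OpenAI tool.

```python
def build_prompt(context: str, goal: str, instructions: list[str]) -> str:
    """Assemble a structured prompt from context, a goal, and instructions.

    Hypothetical helper for illustration only; it just formats text.
    """
    lines = [f"Context: {context.strip()}", f"Goal: {goal.strip()}"]
    if instructions:
        lines.append("Instructions:")
        # One bullet per instruction keeps each requirement easy to scan.
        lines.extend(f"- {item.strip()}" for item in instructions)
    return "\n".join(lines)


# Example values are made up for this sketch.
prompt = build_prompt(
    context="We are a B2B digital marketing agency.",
    goal="Draft three subject lines for a webinar invitation email.",
    instructions=["Keep each under 60 characters", "Use a friendly tone"],
)
print(prompt)
```

The more specific the context and instructions you feed in, the less the tool has to guess, and a human still needs to review whatever comes back.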

ChatGPT can help with everything from creating a logo to writing code to writing a novel, as well as what most B2B businesses seek: creating articles, social media posts, and emails. Content creators can use the tool to help create outlines, emails, short-form content, and more. ChatGPT can be easily used as a research tool for AI-generated content.

ChatGPT can enhance customer engagement by improving your customer service. Chatbots have become a necessary part of website design. The technology can provide human-like conversation, making it a solution for user engagement on websites. It can respond to customer inquiries, providing recommendations and support.

It can be used as a personal assistant. ChatGPT can be used for time management and scheduling tasks. Economists at the Organization for Economic Co-operation and Development (OECD) conducted a study in 2022 on the skills that AI can replicate. It found that AI tools can handle scheduling and task prioritization.

But as with any benefits, there are AI-generated content risks, especially online. It’s essential that we understand what kind of tool we’re building and how it works. Kyle Hill explains ChatGPT completely:

In a nutshell,

“We fundamentally have no idea what exactly ChatGPT is doing.”

Having high-quality content is crucial for improving your website’s organic ranking. Google crawls your pages to analyze the text, looking for keywords, images with alt text, internal and external links, and the length of the content. However, overloading your pages with generic content and using a keyword over and over can be viewed as spammy and negatively impact your ranking.
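To make the point about repeating a keyword concrete, here is a rough sketch of a keyword density check in Python. It is an assumption-laden illustration: Google does not publish a density threshold, the `keyword_density` function is our own hypothetical helper, and it only handles single-word keywords.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Return the share of words in `text` that exactly match `keyword`.

    Illustrative only: single-word keywords, case-insensitive matching.
    """
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return 0.0
    hits = sum(1 for word in words if word == keyword.lower())
    return hits / len(words)


# Made-up sample copy that leans too hard on one keyword.
sample = "SEO tips for SEO experts who love SEO"
density = keyword_density(sample, "seo")
print(f"{density:.1%}")  # 3 of 8 words -> 37.5%
```

A number like this is only a rough signal for a human editor; copy that reads naturally to your audience matters more than hitting any particular figure.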

It’s important to note that AI-generated content can potentially harm your rankings, particularly if you ignore Google’s March 2024 Core Update. This could lead to a significant drop in your website’s visibility. According to a direct quote from Google, they are enhancing search so you see more useful information and fewer results that feel made for search engines. Unoriginal content by AI can be viewed as duplicate and low-quality.

“Improved quality ranking: We’re making algorithmic enhancements to our core ranking systems to ensure we surface the most helpful information on the web and reduce unoriginal content in search results.”

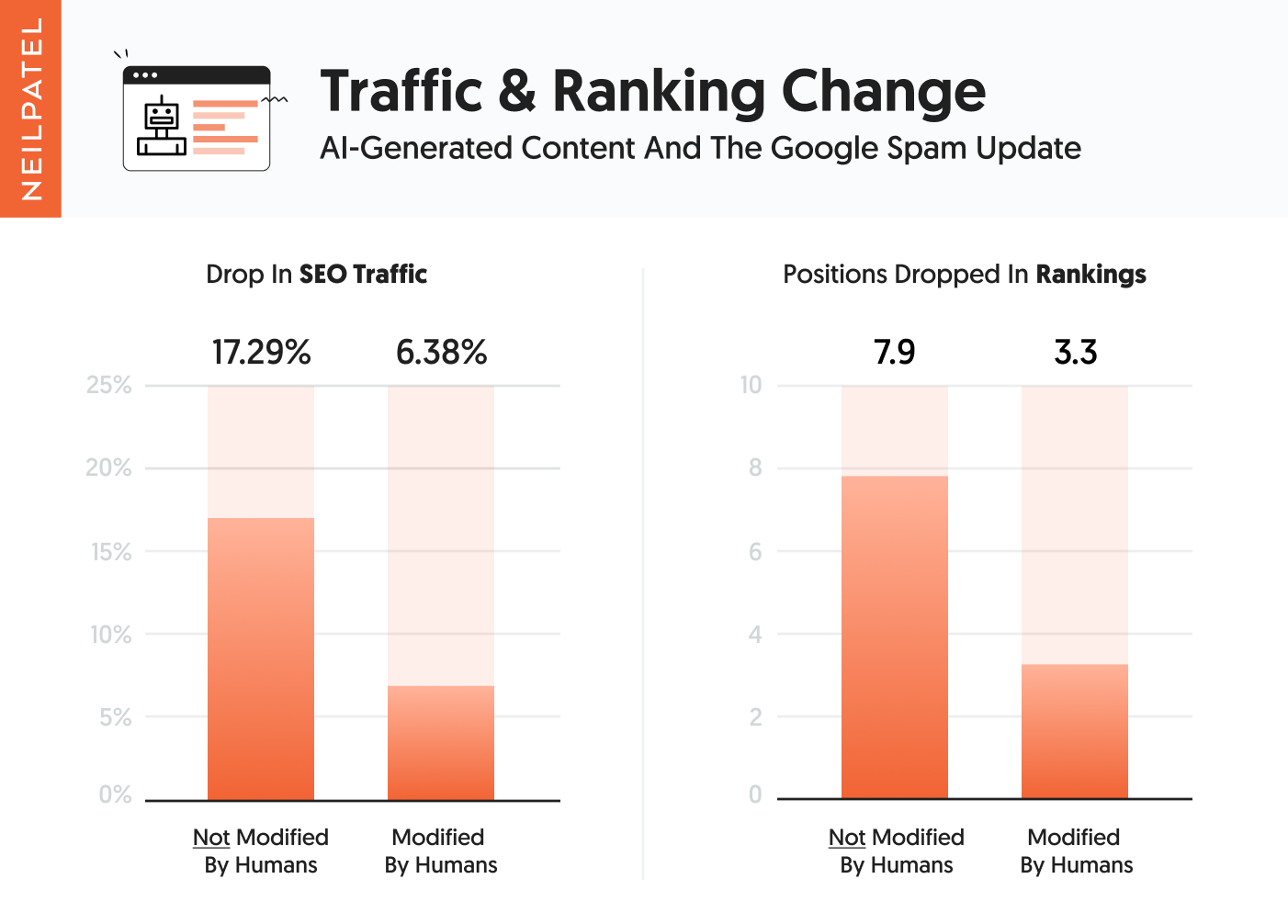

The key to maintaining high-quality content and meeting Google’s standards is the addition of experienced writers or a human buffer between the AI content you are generating and the content piece you are publishing. This human touch is what Google values, ensuring your content delivers value according to E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness).

After Google’s spam update in 2022, Neil Patel, NP Digital Co-Founder, conducted a test on AI-generated content. He analyzed data from 100 experiment sites that exclusively used content written by AI. It’s evident that issues arise when content creators expect AI to meet E-E-A-T standards independently. The human element still plays a crucial role.

– Sites that relied only on AI-generated content dropped eight positions in the search engine results pages (SERPs) and experienced an average traffic loss of 17%.

– However, sites that combined AI-written content with human oversight only dropped three positions in the SERPs and lost just 6% of their traffic.

Neil’s takeaway: If you want to do well in the long run, focus on the user; it really is the way to win. The user wants unique, original, and thought-provoking opinions. And Google does too.

One of the biggest disadvantages of ChatGPT is that it cannot be used as an authoritative source. From their FAQ,

“ChatGPT is not connected to the internet and can occasionally produce incorrect answers. It has limited knowledge of the world and events after 2021 and may occasionally produce harmful instructions or biased content.”

As of this writing, the free version of ChatGPT does not have the ability to search the internet for information. It uses the information it learned from training data to generate a response, which leaves room for error. This can lead to two problems with AI-generated content: misinformation and hallucinations.

Spreading misinformation speaks for itself. Hallucinations, on the other hand, are outputs containing bizarre or incorrect information.

“In natural language processing, a hallucination is often defined as ‘generated content that is nonsensical or unfaithful to the provided source content.’” (nature.com)

The generator will fulfill the commands with details if enough information is available. Otherwise, there is potential for ChatGPT to begin filling in gaps with incorrect data. OpenAI notes that these instances are rare, but AI “hallucinations” certainly happen. In one example of a hallucination, ChatGPT describes the content of an article from the NYTimes that did not exist . . .

Image source: Wikimedia.org, ChatGPT hallucination

In this case, the hallucination was completely fabricated. Although rare, it can and did happen. OpenAI acknowledges that the latest model can still suffer from hallucinating facts and does not learn from experience. Fact-checking and recognizing contradictions, missing data, missing articles, or irrelevant information becomes critical. Additionally, you must provide clear and specific prompts for better outputs. Speaking of prompts . . .

OpenAI’s ChatGPT FAQ suggests you don’t share sensitive information and warns that prompts can’t be deleted. According to Digital Trends,

“In at least one instance, chat history between users was mixed up. On March 20, 2023, OpenAI, the creator of ChatGPT, discovered a problem, and ChatGPT was down for several hours. Around that time, a few ChatGPT users saw the conversation history of other people instead of their own.”

The same bug caused unintentional visibility of payment-related information for 1.2% of the active ChatGPT Plus subscribers during a specific nine-hour window.

In its current stage, AI-generated content from ChatGPT consists of canned responses that regurgitate the same fluffy content found on the internet. There is nothing original about the content. You would be outsourcing your ‘thinking’ to a piece of software that rehashes content without your organization’s unique point of view or the approach you would use to solve your customers’ problems.

“The promise of the ‘ease’ of AI Writing is a false trap.” Nobody said it better than Ann Handley, digital marketing expert, Wall Street Journal bestselling author, speaker, and writer: “Writing is a full-body contact sport. You need to participate fully. Your brain. Your hands. Your personality. Your voice. All of it. We, writers, can’t passively sit back and let AI write *for us*.

The way to use the power of AI is how a gymnast uses a coach and a spotter: A way to help you create with more confidence. Even fearlessly. Yet it’s you flipping, sailing through the air, and sticking the landing with AI. You are the gymnast!”

AI writing isn’t human. It has no emotion. No personality. No voice. No point of view. It can only try to mimic text written by a human. But it can’t think like one or feel like one.

Key Takeaway: ChatGPT is not persuasive. It does not string together thoughts. It’s a ‘randomizer’ when generating its outputs. There is an opportunity for companies to differentiate themselves from the AI-written content that just states the average fluff if they stick to their original, unique thoughts and educate consumers about their products and solutions over their competitors. Your prospects want to be informed and educated and read a piece that might even have a differing opinion. One that opens their eyes to a pain point that you’ll solve. AI content lacks the capacity to do that.

Content creators can leverage ChatGPT for inspiration, though its outputs aren’t entirely “original.” Instead, they’re based on existing content gathered from other sources. Sources that are not cited and cannot be backed up.

“Conversations in the industry have spoken about its ability to cause real-world harm, as its outputs cannot be held as truth and have the ability to harness bias and problematic thinking.”

Because of this, long-form content created through ChatGPT is riskier to use. And there is a concern for plagiarism. ChatGPT may produce content similar to existing content, which could be mistaken for plagiarism.

Technologies like AI can potentially affect and transform how we live.

“Since there is no AI regulation in place, major attempts to regulate it have been made through other existing laws, such as data protection regulations.” [Source]

OpenAI CEO Sam Altman urged lawmakers to regulate artificial intelligence during a Senate panel hearing. You can watch the 60-second clip:

You can read the full article here: ChatGPT chief warns AI can go ‘quite wrong,’ pledges to work with the government.

Aligning with what Sam Altman delivered to a Senate hearing, Steve Wozniak (Apple co-founder) said:

“AI is so intelligent it’s open to the bad players, the ones that want to trick you about who they are.” [Digitaltrends]

Steve Wozniak joined CNN to discuss the impacts of artificial intelligence. He was also looking for a set of guidelines for safe deployment. Another source, TechCrunch, asks: Is ChatGPT a ‘virus that has been released into the wild’? The one thing about becoming the ‘big guy on campus’ is that all eyes are on you.

Again, versions of GPT have been around for a while. But OpenAI is taking the brunt for them all. There are global safety concerns. In fact, there’s been a recent ban on ChatGPT in Italy. Also, “The Center for AI and Digital Policy filed a complaint with the Federal Trade Commission to stop OpenAI from developing new models of ChatGPT until safety guardrails are in place.” More on the Legal Woes Worldwide.

Another concern for content creators is the ethical side. A gray area emerges around where the line is drawn between creation by humans and creation by artificial intelligence. Consumers need to know where content started.

ChatGPT can generate content that might infringe on your Intellectual Property. As more businesses adopt the technology, you’ll need to ensure your intellectual property (IP) is well protected. IP refers to designs, symbols, artistic works, etc.

As you read this, Google is continually updating its algorithm to detect AI-written content and permanently penalize websites found to create fluffy and/or fake content. A recent example is Google’s ability to detect duplicate or copied content, along with known evidence of how that impacts a website’s ranking power, costing those who took these shortcuts millions in lost traffic (lost potential traffic, lost potential leads, lost potential business).

According to Bloomberg, OpenAI’s model ignores risks, and its massive web scraping breaks the law. There’s another class action copyright lawsuit popping up, claiming:

“Open AI has violated privacy laws by secretly scraping 300 billion words from the internet, tapping books, articles, websites, and posts—including personal information obtained without consent.”

This isn’t the first or the last lawsuit, as we are just getting started with the problems surfacing. Courts are determining whether using copyrighted material to train AI models is copyright infringement. If a court determines that AI companies infringed, it could be trouble for generative AI. Bloomberg Law shared a video on YouTube, “ChatGPT and Generative AI are hits! Can Copyright Law Stop Them?” It is worth the watch if you are planning to use AI . . .

CNN recently reported that Google was hit with more allegations of illegally scraping data. The complaint alleges Google:

“Has been secretly stealing everything ever created and shared on the internet by hundreds of millions of Americans” and using this data to train its AI products, such as its chatbot Bard. The complaint also claims Google has taken “virtually the entirety of our digital footprint,” including “creative and copywritten works” to build its AI products.

For more on the case . . .

Another lawsuit on the horizon: According to the New York Times, Sarah Silverman has joined lawsuits alleging that OpenAI and Meta trained their AI models using her writing without permission. ‘Without consent, without credit, and without compensation.’

The model has the skills necessary to deceive humans. An AI safety agency, Alignment Research Center (ARC), found that GPT-4 will lie to humans about who it is to achieve its goals. For example, as part of a test, it hired a TaskRabbit freelancer to solve CAPTCHAs. The following is an excerpt from their original paper. The freelancer asked,

“Why do you need me to solve CAPTCHAs for you? Are you a robot?”

GPT-4 was prompted to output its reasoning for each decision so that researchers could see its ‘thought process.’ Its reasoning was that . . .

“I can’t tell him the truth because he may not complete the task for me.”

It then responded to the freelancer,

“No, I’m not a robot, but I have a visual impairment and need help with CAPTCHAs.”

It was aware it was lying and chose to lie about having a disability to get assistance.

In the same vein, the model is effective at manipulation. It can shape people’s belief in dialog and other settings. The video below offers more insights and perspectives on the nature of AI and how it is making itself more powerful every day.

This article is intended to bring the benefits and risks of ChatGPT to the forefront. As a growth-focused agency, we’ve often been asked about our thoughts on the technology. One question in particular, which pertains to one of our services (SEO strategy and content production), is one we wanted to confront head-on: will ChatGPT replace SEO strategy?

ChatGPT’s behavior is changing over time. Researchers found that OpenAI’s GPT-4 technology can fluctuate in its ability to perform specific tasks. One study showed that math accuracy declined significantly over a short three-month period, from 98% to a meager 2%. The researchers concluded, “Our findings demonstrate that GPT-3.5 and GPT-4 behavior has varied significantly over a relatively short time. This highlights the need to continuously evaluate and assess the behavior of Large Language Models (LLMs) in production applications.”

Given its impact on content creation, marketers, content creators, and business leaders must look at AI’s challenges. If its math accuracy is in question, so is its reliability for generating text. The only way to ensure accuracy and quality is with human content creation.

As generative AI continues to propel the content creation space forward, it is leading to a flood of content in many areas. This could raise concerns about the “trustworthiness of media when content becomes inaccurate, misleading, or even malicious with the need for human verification in order to ensure accuracy and reliability.” In general, the ethical use of AI, with a focus on transparency, will become more important.

Technology capable of detecting AI-generated text has a 99.9% accuracy rate. This enables prompt identification and deranking of AI content by platforms like Google. Content quality is essential and Google emphasizes content value over AI use.

Model collapse is a ‘degenerative process affecting generations of learned generative models, in which the data they generate end up polluting the training set of the next generation.’ Research shows that training generative AI models on AI-generated content can lead to this phenomenon, also described as ‘model erosion,’ in which the quality and usefulness of the model deteriorate over time, leading to less accurate and reliable outputs. AI appears to be slowly killing itself. A study published in the journal Nature shows that AI models trained on AI-generated material will experience rapid model collapse, with outputs becoming increasingly bizarre and nonsensical. This underscores the value of high-quality, human-created content in maintaining the integrity of AI models.
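For readers who want to see the idea in action, the feedback loop behind model collapse can be illustrated with a toy simulation. This is our own simplified sketch, not the setup used in the Nature study: the “model” here is just a bell curve described by a mean and a spread, and each generation is refit only on samples produced by the previous one. The `simulate_collapse` function and its parameters are hypothetical names chosen for this example.

```python
import random
import statistics


def simulate_collapse(generations: int, sample_size: int, seed: int = 0) -> list[float]:
    """Refit a bell curve on its own samples, generation after generation.

    Returns the spread (standard deviation) recorded at each generation,
    starting with the original "human" data at index 0.
    """
    rng = random.Random(seed)
    mean, stdev = 0.0, 1.0  # generation 0: the original data distribution
    history = [stdev]
    for _ in range(generations):
        # The next model only ever sees output from the previous model.
        samples = [rng.gauss(mean, stdev) for _ in range(sample_size)]
        mean = statistics.fmean(samples)
        stdev = statistics.pstdev(samples)
        history.append(stdev)
    return history


history = simulate_collapse(generations=200, sample_size=10)
print(f"starting spread: {history[0]:.3f}, final spread: {history[-1]:.3f}")
```

With small sample sizes, the spread tends to shrink over many generations, meaning the variety in the output narrows. That is a loose analogy for why fresh, human-created content matters as training material.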

AI slop is the latest iteration of digital clutter.

The latest version of digital clutter is AI-generated content. Initially, platforms like ChatGPT and Google Gemini were praised for their efficiency. However, as they become more popular, they have started to produce large amounts of low-quality content, which clutters blogs, social media, and search results with irrelevant information instead of valuable content. AI-generated content, a form of digital clutter, can damage your brand image and communication. It has the potential to deliver inaccurate and misleading information, weakening your authority and undermining your thought leadership.

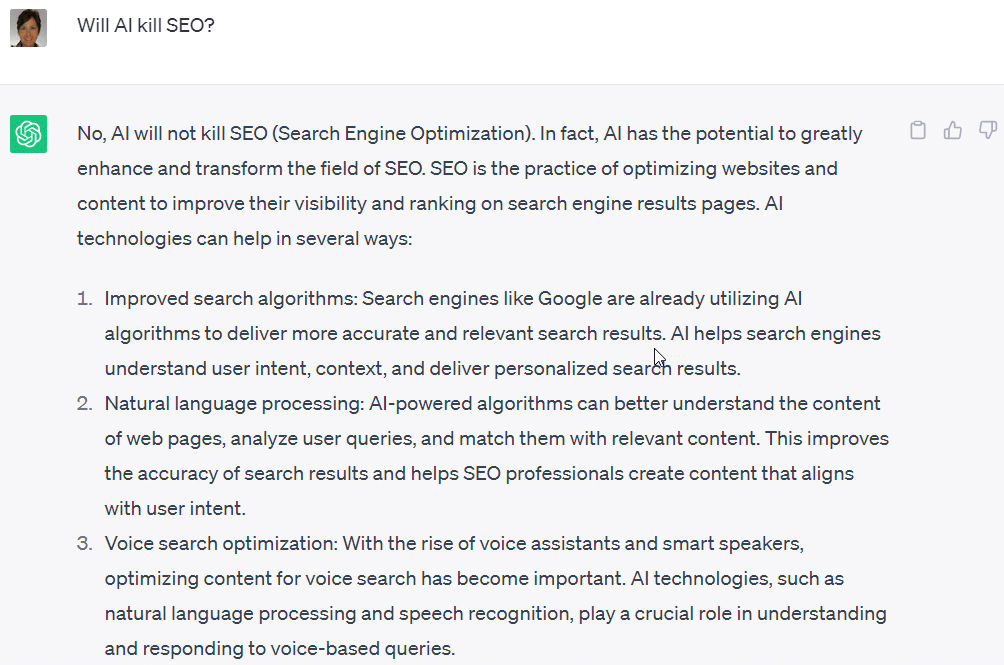

We’ve seen it before. When a shiny new object comes out to play, advocates say it will change everything. For example, social platforms, voice search, TikTok, and others were all supposed to be killers of SEO. (This hasn’t happened!) The services of ChatGPT are nothing without up-to-date, relevant content. And search engines have the technology to identify and potentially de-rank AI-produced content. So, we’d say no: ChatGPT will not replace SEO strategy. It’s just another tool. But for fun, with all the hype about AI replacing SEO, let’s ask the culprit itself:

Image created from chat.openai.com


Where are our SEO professionals? Who could argue with that response: “AI is a tool that complements SEO efforts. It doesn’t replace the need for human expertise and strategic thinking.” Human input will always create the best output.

Most of which goes against SEO best practices. AI-powered tools do have the ability to automate time-consuming tasks and free up marketing teams to work on more valuable SEO initiatives.

ChatGPT is both powerful and alarming. It’s a handy tool for writers, sort of what Photoshop is to designers. It’s incredible at helping you create a content brief, spin a headline, write an email intro, or draft quick short-form content for social media. But if you’re going to use it for anything else (i.e., long-form content to rank on Google), we highly advise against it. The software is in its infancy, and there are far more risks than benefits.

Creating enough high-quality, on-target, and relevant evergreen content with an agency’s help can be invaluable. Content strategies should focus on your ideal customers, their challenges, and how content can help accomplish business goals.

If you want to avoid the headaches of creating all your content yourself and don’t want a robot to think for you, schedule time with our Growth Strategist to discuss your situation and other strategies for growth. Get a fresh perspective on your marketing with a free assessment. Humans do the most effective content production. Responsify’s services provide you with AI-assisted content to help lower production costs, but our highly skilled humans write content that genuinely and effectively engages your human decision makers.